The Principles Office

A look at the Machiavellian machinations behind formal documents of military ethics for computers with guns.

This is a story about a story I did not write.

In February 2020, the Department of Defense announced that it had unveiled a set of principles for how it was going to use Artificial Intelligence. It was, at least as the military intended it, to be the culmination of a process set off by a worker revolt at Google in 2018. After workers at the technology giant learned the company was contracted to build an image-processing AI for the military, those workers made their objections known and organized against it.

Project Maven, as both the contract and the labor dispute are known, was built on top of open-source software that Google engineers worked on. It was a kind of computer vision analysis, used to process hours and hours of video and find common objects, designed as the first step in a process that would have more and more human involvement further down the line. It was, generously, a weapons-adjacent program.

In response to the worker action, Google announced its own AI principles. The Defense Innovation Board, a body set up to coordinate and manage how the Pentagon worked with the modern technology sector, set about to create its own set of guidelines for Artificial Intelligence.

I do not know the full story of what happened between the start of the Board’s work and the end. What I have instead is the visible text of the final principles, and the draft of the principles from October. These are two points; the course between them has to be inferred, but the direction is clear.

Here, for example, is how the second principle, “Equitable,” reads in final form:

Equitable: The department will take deliberate steps to minimize unintended bias in AI capabilities.

And here is how that same principle read in draft:

Equitable: DoD should take deliberate steps to avoid unintended bias in the development and deployment of combat or non-combat AI systems that would inadvertently cause harm to persons.

That is, to say the least, quite a reduction in specificity.

(A full list of the principles as drafted and principles as adopted is available here. The text of the adopted principles comes from the Pentagon’s own announcement, while the draft principles are still available here. I recommend this DefenseOne story, too, for some insight into all this.)

Illuminating at least a little of what happened, there is a full 76-page document of sourcing that went into drafting these principles, outlining why the wording of the draft worked and what it prohibited.

Here, again under equitable, is part of the reasoning for how the Pentagon should think about balancing privacy, harm, and bias in its applications (citation from original):

The reliance on inappropriate historical data for the Idaho Medicaid tool is noteworthy, as many AI decision-aids or tools will undoubtedly rely on exactly this type of information. For the Department, use of such historical data in personnel decisions, such as billeting or promotions, will likely draw from such sources. It is therefore incumbent that equitability act as a guiding principle in the development and deployment of such systems, and that DoD performs appropriate checks for unwanted bias.

Promotions and housing aren’t the most exciting topics, but they are ones where AI error (and function!) can happen at a massive scale for lots of people. This matters out of combat, and it matters especially in combat.

Again, from the supporting document:

Specifically, DoD should have AI systems that are appropriately biased to target certain adversarial combatants more successfully and minimize any pernicious impact on civilians, non-combatants, or other individuals who should not be targeted

These are also, as you may note, requirements under the laws of war. While the specific treaties and rules for lethal autonomous weapons remain subject to ongoing debate in the international community, specifying that AI be designed with the intent to follow the laws of war seems like a bare minimum baseline for any nation paying companies to design and build thinking machines for battle.

What is fascinating for anyone writing in this space, and which has certainly informed my coverage going forward, is the gulf between the draft and the adopted principles. The Defense Innovation Board specifically hired experts in the field, people familiar with the debate, laws of war, and with how the military saw its role in the world, to come up with these rules, and to come up with a public-facing justification for them.

That each principle changed between public debut and Pentagon adoption, and each changed to be more permissive, strongly suggests dissatisfaction or pressure or discomfort with the draft. It is hard to not be cynical about what that pressure meant.

It also means that, as much as the Pentagon and Google hoped to have Principles of AI Ethics as the end of the story, they are instead just part of a slowly unfolding picture of what it means to build, buy, and use artificial intelligence for the military.

Project Maven’s coda is that Google subcontracted the work out. The Pentagon’s AI coda is that the draft principles’ earnest attempt to meet workers at their word got watered down into a document that functionally lacks even the wording for self-imposed restraint.

It means, too, that this fight over how code gets used against people will only continue as more and more parts of the government adopt AI for daily functioning.

On July 23rd, the Office of the Director of National Intelligence rolled out AI principles for the whole of the United States spy apparatus. This time, there was no previewed draft, and only a modest supporting framework, instead of a lengthy supporting document. Aware that this is not the end of the process, but likely just a start, ODNI cheekily labeled the principles Version 1.0.

WITH A BANG AND A BLACKOUT

Every so often, publications like The Hill run an op-ed with a title like “China's surprise, years in the planning: An EMP attack.” The story is about nuclear war, but it tries very hard to convince readers that it is instead about a special kind of war with nuclear weapons, one that only the United States can lose.

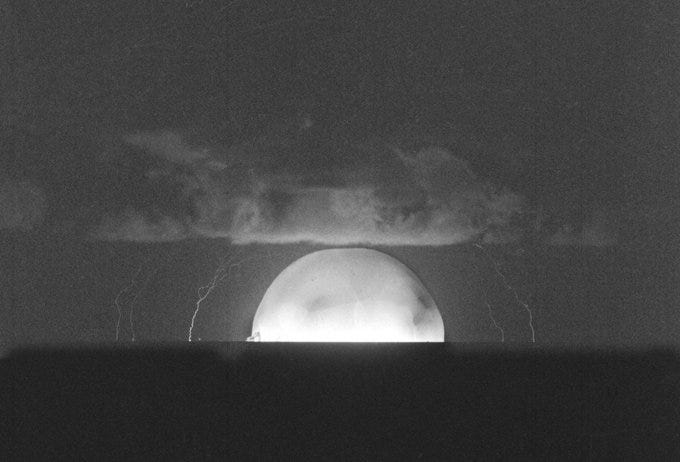

Extrapolating from both what is known and what is theorized about high-altitude nuclear explosions, this kind of story presupposes that despite nearly 75 years of nuclear deterrence keeping an uneasy lid on all-out war between nuclear-armed nations, a sneak attack might let a nation just Leeroy Jenkins its way into incapacitating the full force of the United States with a nuke-caused electromagnetic pulse attack.

The face of this imagined reckless foe has changed, from Russia in the 1980s, to particularly well-off and well-equipped terrorists in the 1990s and 2000s, to North Korea or China in the 2010s and 2020s. It has remained a weird angle at every turn, because it imagines someone in possession of a nuke gambling on a weird side-effect rather than the known horror of a nuclear blast.

For Foreign Policy, I wrote about keeping the nuclear war part of the story at the foreground. As I said in the story:

Why, after obtaining a nuclear weapon, would they not simply use the blast to kill hundreds of thousands of people directly? [Representative Franks] argued [in 2012] that the American dependence on modern technology poses a unique vulnerability enemies might exploit, unlike the common vulnerability every human being has to their flesh being disintegrated by fire and heat.

I’ve said before that Americans, culturally and institutionally, downplay the horrors of nuclear war, to our own and everyone else’s detriment. Which is why the EMP crowd is so weird to me: they clearly fear a war between major powers, but the policy recommendations that come from it are all over the place, and very rarely involve resilient infrastructure.

Since the Trinity test, scientists have hardened equipment against EMP blasts. The techniques are known and have been iterated upon for decades, involving everything from concerns of nuclear fratricide to testing aircraft safety against smaller EMPs at a massive all-wood structure just outside Albuquerque, New Mexico. EMPs, as a technical problem, are about infrastructure, and the fixes are the same kinds of infrastructure changes that could likely preserve modern electrical life in the face of another Carrington Event, or a solar storm so massive it reaches Earth.

I have few illusions about the quality of life for the weary survivors of the world’s first nuclear war. I put far more stock in people working to endure natural disasters, even cosmic ones. I wish, so very much, that the EMP lobby took all the energy it spends on fear of nuclear sneak attacks and instead devoted those same efforts to urging Carrington Event resiliency.

READING LIST: DAYS OF LEDE

A particularly strange part about having a beat focused on military technology is the comfort developed with describing war as “a thing that happens over there.” This framing, often an inherited rhetorical device, means developing a full vocabulary of euphemisms about state-directed violence abroad, designed to distinguish and contrast from some kind of peace at home. The United States has formally been at war for nearly 19 years abroad, under rules passed in September 2001. (Longer, counting a whole bunch of other kinds of action.)

It has also, from the start, been home to a host of violence within and about the polity, determining through force of arms, and retention of arms, who gets to be part of the state and who is its target.

This fortnight, I read Albuquerque: Openly Racist Anti-Quarantine Protests Attract Militia, Conflict With BLM by Bella Davis. An Albuquerque-based journalist also writing at The Daily Lobo, Davis has the keenest eye for what, actually, is happening as militias mix with local parties and host rallies for the right to recklessly endanger others.

Also worth reading is Lauren McGaughy’s account of the killing of Garrett Foster, a protestor whom a driver fatally shot in Austin on July 25th. The shooter drove into the crowd, a tactic common among people trying to physically disrupt the ongoing Black Lives Matter protests and marches. The stakes of who gets to be a person on the streets, of who the law protects and who it is weaponized against, are clear and real.

NEWSLETTER OF OUR DISCONTENTS

I’d be remiss if I didn’t end this issue of Wars of Future Past without mentioning that I am now part of Discontents, a Substack collective designed to bring together writers across a range of subjects into one multifaceted publication. Once upon a time, newspapers and magazines did this lucratively. While all of journalism is in a rough place, and as writers move to newsletters in response, readers should still get to experience the thrill of stumbling across fascinating writing by simple virtue of proximity. As a starter, I recommend Kim Kelly’s Be The Spark and Derek Davison’s Foreign Exchanges.

That’s all for this fortnight. Questions, comments, or reading recommendations? Please email them my way! Thank you all for reading. I cannot tell you how much I missed writing newsletters like this.